Open access to research data promises to generate new knowledge more quickly. Comprehensible data quality is a central prerequisite for using data beyond its original purpose. On behalf of SWITCH, SATW surveyed experts in Switzerland on which aspects and measures are particularly important in this respect.

Regardless of the field of application, high quality is usually a basic condition for further evaluation of data and for reliable predictions. To ensure that data can be reused beyond its original purpose, its quality should be documented in a comprehensible manner. This is particularly important in research, so that the great potential of Open Science can be fully exploited. Which criteria are particularly relevant for comprehensible data quality, and what measures are taken to ensure it? To answer these questions, SATW interviewed experts from nationally relevant research domains, industry and the service sector within the framework of a SWITCH Innovation Lab. The results are published in a short report.

Research data are very heterogeneous and project-specific. Depending on the application, different requirements are imposed on their quality. Researchers are responsible for data quality (DQ) and ensure it by complying with scientific standards and guidelines. From the point of view of the interviewees, DQ has a very high priority. However, the highest quality can usually only be achieved with major effort. Therefore, especially in the business world, quality levels are often defined. Unclear framework conditions in connection with DQ can also lead to additional expenditure. The general trend in research is to focus more and earlier on DQ, for example in response to demands from research funding organisations such as the Swiss National Science Foundation (SNSF).

For comprehensible DQ, various aspects have to be considered. Their relevance depends on the problem under consideration. The interviewees most frequently mentioned the accessibility of data as an important prerequisite for comprehensible DQ. In research, however, only a fraction of the data produced is currently accessible, and the available information often lacks transparency. Publishing data also requires a lot of time to be spent on documentation.

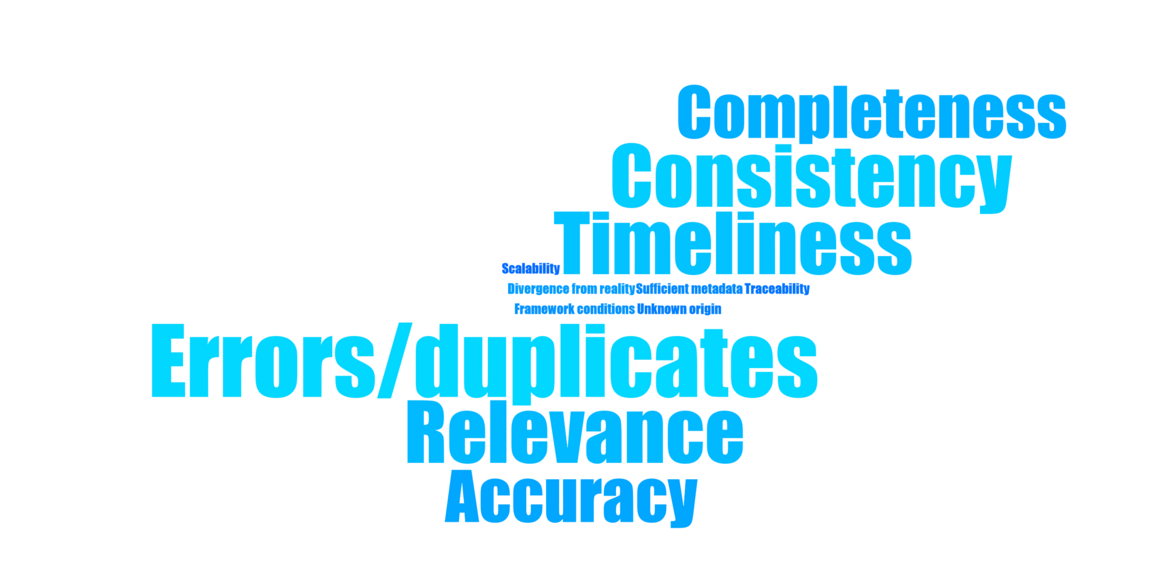

Other fundamental aspects of DQ are authenticity, integrity and indisputability. However, these are often only sparsely checked. Processes for ensuring DQ, such as the development and implementation of data governance or quality rules, pose major challenges in data management. Where possible, such processes should be automated. Incorrect data and duplicates, timeliness, consistency and relevance are the specific DQ challenges that the experts mentioned most frequently. Data describe reality only approximately. This results in uncertainty in their evaluation, which should be taken into account as early as data collection. Ensuring that data is up to date is often difficult and costly.
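
As an illustration of what automating such quality rules could look like, the following minimal Python sketch flags duplicates, outdated records and incomplete records in a small list of records. It is not taken from the SATW report: the function name `check_quality`, the field names `station` and `measured_on`, the one-year age limit and the sample records are all assumptions chosen for this example.

```python
from datetime import date, timedelta


def check_quality(records, key_fields, date_field, max_age_days, today):
    """Return the indices of records that break three simple, hypothetical rules."""
    cutoff = today - timedelta(days=max_age_days)
    seen = set()
    report = {"duplicates": [], "stale": [], "incomplete": []}
    for index, record in enumerate(records):
        # Completeness: every key field and the date field must be present.
        if any(record.get(field) is None for field in key_fields + [date_field]):
            report["incomplete"].append(index)
            continue
        # Duplicates: the same combination of key fields has been seen before.
        key = tuple(record[field] for field in key_fields)
        if key in seen:
            report["duplicates"].append(index)
        else:
            seen.add(key)
        # Timeliness: the record is older than the allowed age.
        if record[date_field] < cutoff:
            report["stale"].append(index)
    return report


# Invented sample records, for illustration only.
records = [
    {"station": "A", "measured_on": date(2024, 1, 10), "value": 3.2},
    {"station": "A", "measured_on": date(2024, 1, 10), "value": 3.2},
    {"station": "B", "measured_on": date(2020, 5, 1), "value": 1.1},
    {"station": "C", "measured_on": None, "value": 2.0},
]

report = check_quality(
    records,
    key_fields=["station", "measured_on"],
    date_field="measured_on",
    max_age_days=365,
    today=date(2024, 6, 1),
)
print(report)
```

Rules of this kind only catch formal problems. Whether a value is actually correct or relevant still has to be judged in the context of the respective project.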

Data administrators follow the guidelines and specifications of the respective projects and domains. Standards vary depending on whether data is generated in research projects, in administration or in industry. Generally accepted standards for data management have not yet been established. If data is not collected according to standardised procedures, it is hardly usable for third parties. Researchers usually publish only aggregated data in which the original information is no longer fully available. The reuse of such data is a challenge, as it is not always clear how the aggregation was carried out. An obligation to publish raw data from research projects generally has a positive effect on DQ. Researchers are thus aware from the outset that others can use their data and, if necessary, verify it. This improves documentation and care in handling data. After all, nobody in research wants to be criticised for having botched data.

The FAIR principles are guidelines for improving the findability, accessibility, interoperability and reusability of digital assets. Most of the respondents stated that they were aware of them and were implementing them as well as possible. However, the principles are often neglected due to a lack of time. Businesses and statistical offices seem to be more advanced in organising and maintaining data and could serve as a model for research in this respect.

Due to the increasing dissemination of Open Access and Open Data, the availability of research data has been steadily increasing for several years. However, many researchers have so far only worked with their own data, with data from companies or with public statistics. Linking and reusing research data would make their use more productive. In addition, this can increase the visibility of the researchers' own work and allow them to benefit from the focus, competencies and assignments of their colleagues. However, research data are documented in very different ways, and interpreting them correctly can be a challenge. The context of the primary data collection should be documented in metadata. The use of external data requires confidence in their quality and in the corresponding data suppliers, whose awareness of DQ is central. Transparent access to data increases trust: frequent use by many different users offers good protection and is an indication of high DQ.
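
To make the point about metadata concrete, here is a minimal Python sketch of a record that describes the context of a primary data collection and reports which descriptive fields are still empty. The class `CollectionContext`, its fields and the sample values are assumptions made for this illustration. They do not come from the report and do not follow any particular metadata standard.

```python
from dataclasses import dataclass, asdict


@dataclass
class CollectionContext:
    """Hypothetical description of how a dataset was originally collected."""
    title: str
    collected_by: str
    collection_method: str
    collection_period: str
    aggregation: str = ""  # how raw values were aggregated, if at all
    licence: str = ""      # terms under which others may reuse the data


def missing_fields(context):
    """Return the names of all fields that are empty or contain only whitespace."""
    return [name for name, value in asdict(context).items() if not value.strip()]


# Invented example values, for illustration only.
context = CollectionContext(
    title="River temperature survey",
    collected_by="Example Lab",
    collection_method="hourly sensor readings",
    collection_period="2023-01 to 2023-12",
)

gaps = missing_fields(context)
print(gaps)
```

A check like this could run before data is published, so that gaps in the documentation are noticed while the people who collected the data can still fill them.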

There are numerous initiatives in the field of Open Science and Open Data. Their success will largely depend on whether and how well the aspects of comprehensible data quality are taken into account. In a pilot phase for a Swiss research data connectome, SWITCH and its partners intend to validate use cases and a possible architecture. The searchability of research data will be a central issue. SATW will make every effort to ensure that data quality is documented as comprehensibly as possible from the very beginning. To achieve this, it must be clarified to what extent uniform framework conditions for research data can be developed and which processes for ensuring DQ can be automated in order to support and relieve researchers.

Manuel Kugler, Head Priority programme Advanced Manufacturing and Artificial Intelligence, Phone +41 44 226 50 21, manuel.kugler@satw.ch