Translated with DeepL

Automated vehicles have been a much-discussed and promising topic in specialist circles for several years. Accordingly, numerous national and international expert committees are working intensively not only to make the safe use of such vehicles on the road possible, but also to guarantee it as far as possible.

This work goes largely unrecognised by the public, probably because the media consider it to be of too little interest to the general public[1]. Instead, speculative considerations tend to take centre stage in media coverage of automated driving.

In some cases, a rather futuristic, or even eschatological, attitude is adopted that sees the automated vehicle as the harbinger of a progressive replacement of humans by machines. The reasoning is that supposedly "self-driving" vehicles using "artificial" intelligence[2] rely on a highly developed combination of hardware and software to control the vehicle and thus make drivers superfluous. This view is fuelled primarily by two factors: overly optimistic marketing promises about the technical capabilities of automated vehicles, and dubious conceptual analogies between humans and machines.

Together, these factors contribute to automated driving often being presented in the media in a distorted and unrealistic way.

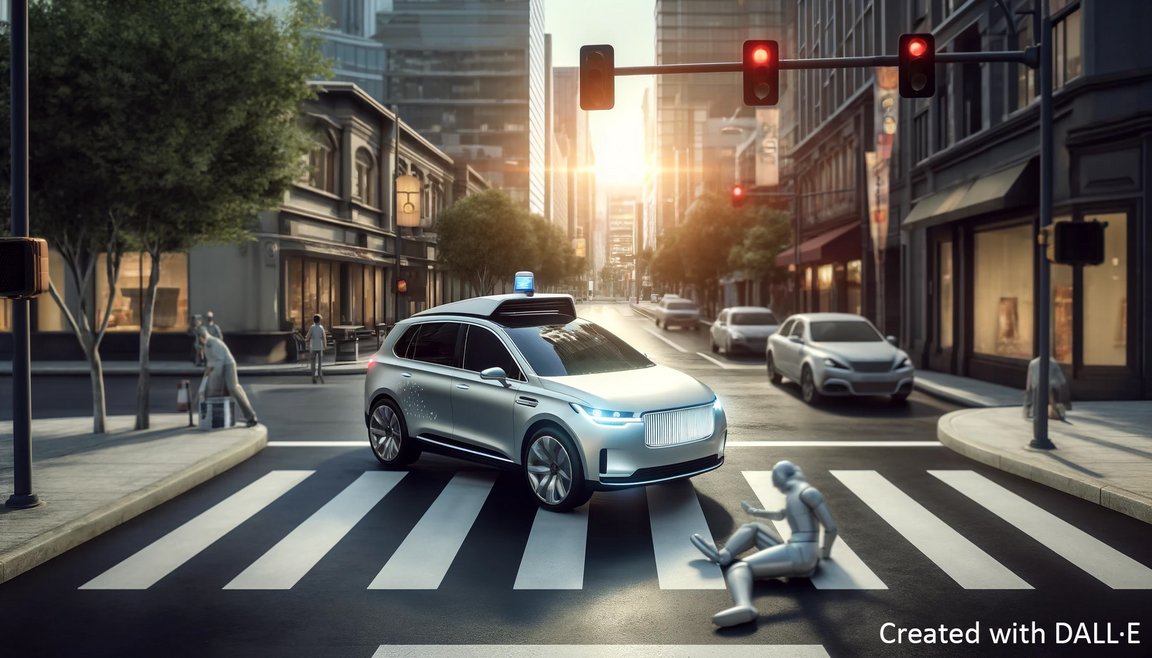

As a result, there is hardly any information about what so-called "self-driving" vehicles can actually do technically and what they are legally permitted to do. Instead, the media prefer to speculate about possible accident scenarios and their consequences. A typical example is the question of whether a "self-driving" car that can no longer stop in time should run over a first-grader at a pedestrian crossing or a retired senior citizen. The discussion is usually framed from an ethical perspective.

What should we make of such media reports and to what extent do they contribute to a better social understanding of the advantages and disadvantages of automated driving? The answer to this question may be inferred from the following observations and comments:

However, this is nothing new. Our society has long accepted, willingly or not, that a suicidal pilot, for example, cannot be held responsible for the deaths of the passengers in the plane crash he causes.

In very rare situations, accidental deaths that result in no criminal sanctions are unavoidable. Every technology harbours certain risks, and automated driving is no exception. Anyone who places excessively idealistic demands and expectations on automated vehicles therefore runs the risk of missing the "train to the future" of automated driving.

[1] However, the assumption that the public is unlikely to be interested in the safety of motor vehicles seems doubtful, as road accidents are the subject of media coverage practically every day.

[2] The question of how meaningful this term actually is will not be explored further at this point.

[3] Depending on the circumstances, these human qualities are understood to be merely "emulated" or "simulated" in so-called "intelligent" systems.

1 If a person is killed or injured or property damage is caused by the operation of a motor vehicle, the keeper shall be liable for the damage.

2 If a road accident is caused by a motor vehicle that is not in operation, the keeper is liable if the injured party proves that the keeper, or persons for whom the keeper is responsible, were at fault, or that a defect in the motor vehicle contributed to the accident.

3 At the judge's discretion, the keeper is also liable for damage resulting from assistance rendered after accidents involving his motor vehicle, provided that he is liable for the accident or that the assistance was rendered to himself or to the occupants of his vehicle.

4 The keeper shall be liable for the fault of the driver and any assisting persons in the same way as for his own fault.

The blog posts in this series offer an interdisciplinary view of current AI developments from a technical and humanities perspective. They are the result of a recurring exchange and collaboration with Thomas Probst, Emeritus Professor of Law and Technology (UNIFR), and SATW member Roger Abächerli, Lecturer in Medical Technology (HSLU). With these monthly contributions, we endeavour to provide a factually neutral analysis of the key issues that arise in connection with the use of AI systems in various application areas. Our aim is to explain individual aspects of the AI topic in an understandable and technically sound manner without going into too much technical detail.